Project 3

Let’s take a look at the Project 3 page and start thinking about ideas for the project. Browse the examples on that page, or check out some of these projects for ideas:

| name | project |
|---|---|
| Angela Washko | https://angelawashko.com/section/289333-Gaming%20Interventions.html |
| Cory Arcangel | https://coryarcangel.com/things-i-made/ |
| Everest Pipkin | https://everest-pipkin.com/ |
| Ian Cheng | http://iancheng.com/, Emissaries Guide to Worlding |
| myhouse.wad | https://www.doomworld.com/forum/topic/134292-myhousewad/ |
| Pippin Barr | https://pippinbarr.com/tags/top-five/ |
| Total Refusal | https://totalrefusal.com/ |
| Zach Gage | http://www.stfj.net/index2.php |

We don’t have much time left in the quarter (2 weeks!) so it’s good to consider the complexity and scope of what you want to do.

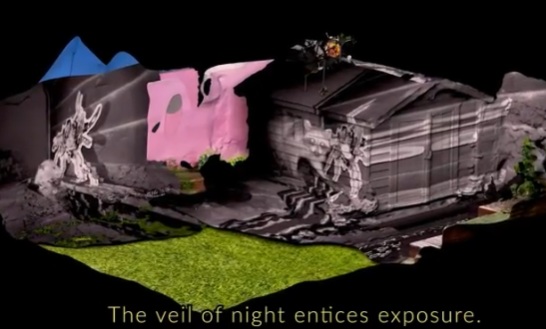

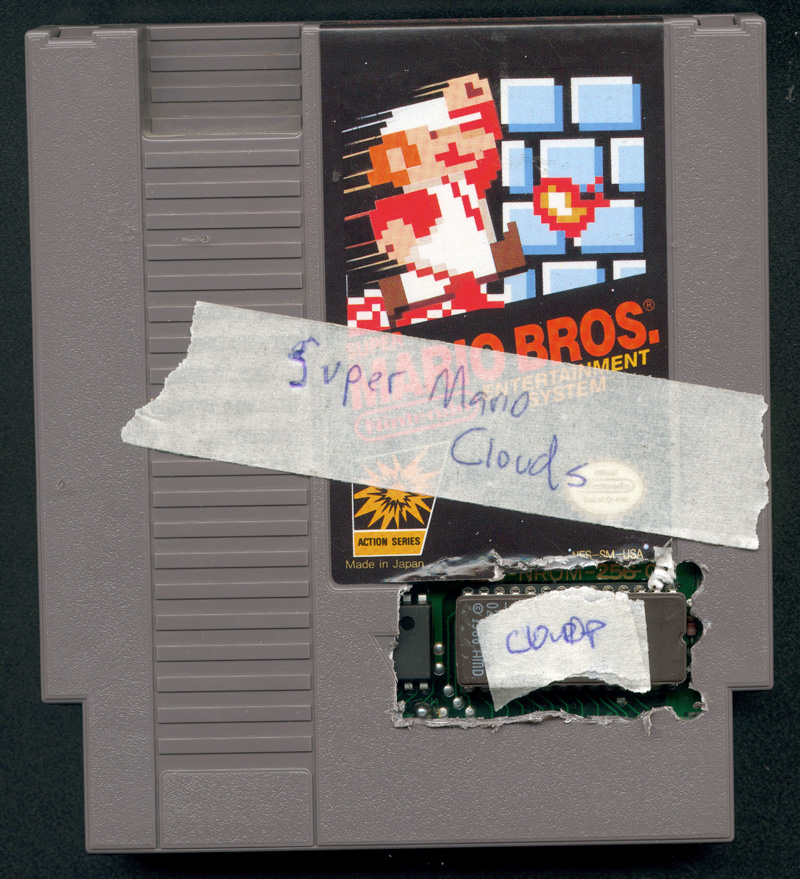

Super Mario Clouds Cory Arcangel (2002)

I have since grown used to programming only because it is the mechanism that seems to make most of the world move. Believe me, if I could order Pizzas by painting, I definitely would paint.

Cory Arcangel

Metagames, remix, sampling, streaming, custom input, mods, machinima

Use Unity and/or other tools (emulators, recording software, existing games) to create a real-time, interactive work where games become the platform for expression.

Recycled Records. Christian Marclay (1983) - Physically remixing a “fixed” medium (live performance).

And even though metagames have always existed alongside games, the concept has taken on renewed importance and political urgency in a media landscape in which videogames not only colonize and enclose the very concept of games, play, and leisure but ideologically conflate the creativity, criticality, and craft of play with the act of consumption. When did the term game become synonymous with hardware warranties, packaged products, intellectual property, copyrighted code, end user licenses, and digital rights management? When did rules become conflated with the physical, mechanical, electrical, and computational operations of technical media? When did player become a code word for customer? When did we stop making metagames?

Metagaming: Playing, Competing, Spectating, Cheating, Trading, Making, and Breaking Videogames. Stephanie Boluk and Patrick LeMieux. 2017

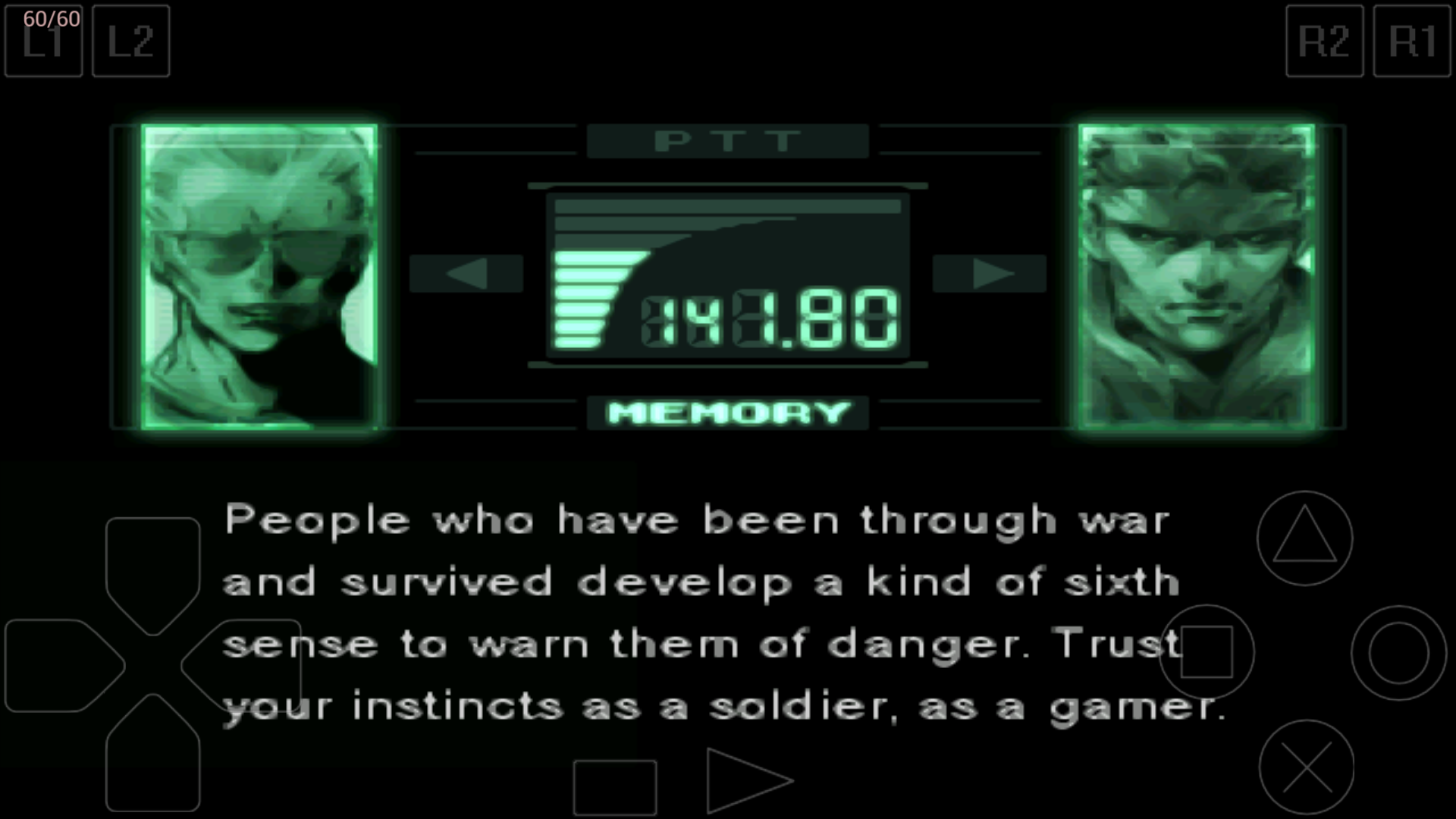

Fourth wall breaking in Metal Gear Solid (1998)

After all, metagames are not just games about games. They are not simply the games we play in, on, around, and through games or before, during, and after games. From the most complex house rules, arcade cultures, competitive tournaments, and virtual economies to the simple decision to press start, pass the controller, use a player’s guide, or even purchase a game in the first place, for all intents and purposes metagames are the only kind of games that we play.

Metagaming. Boluk and LeMieux. 2017

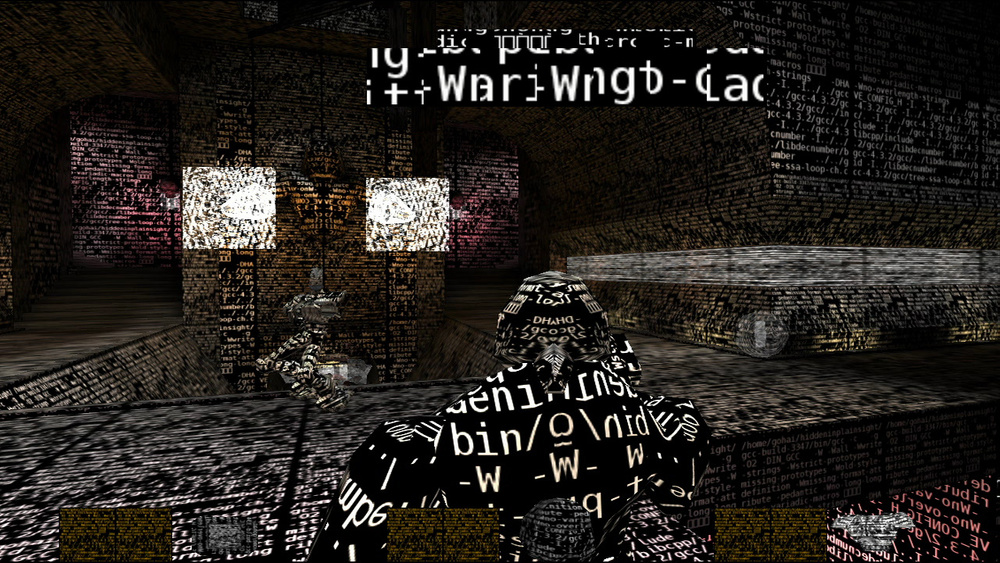

Hidden In Plain Sight. Gottfried Haider (2008) - DMA alum!

What’s really valuable about hacking and modifying games is the realization that there are ways of interacting with games other than just playing them: roles beyond consumer. Inventing rules is, after all, inventing games.

Rise of the Videogame Zinesters Anna Anthropy (2012)

Modern Warfare. Claire L. Evans (2010)

Visual expression and elements of design

Take each of these into consideration when designing the visual features of your project. How are you approaching a particular element? What does it communicate? How does one element relate to other visual, mechanical, textual, sonic, etc. elements of the work?

It can be useful to search online (or ask a virtual assistant) for how to achieve the “look” of an existing game in Unity. Try including the term “shader” or “material”, e.g. “zelda shader in Unity”.

Line

Horizontal, vertical, diagonal, straight, curved, dotted, broken, thick, thin.

Nice talk about achieving outline effects in the game Rollerdrome - https://youtu.be/G1NY0LKDqJo

Color

The wavelength of light. Hue, value, intensity, and temperature.

Shape

2D, flat, geometric, organic

Form

3D, Geometric, organic

Value

The lightness or darkness of an image

Space

The area around, within, or between images. Positive and negative space. Composition.

Kentucky Route Zero 15:36

Texture

The feel, appearance, thickness, or stickiness of a surface (smooth, rough, silky, furry)

For a ton of insightful opinions and examples – references within and beyond games – definitely check out this blog post on Real Time 3D Imagery

Toon Shaders

Many of the effects mentioned at the start of the day can be achieved using a technique called “toon shading”, which mixes elements of both lit and unlit shading. The example image above is from a free URP toon shading pack called OToon (sadly no longer available via the Unity Asset Store – backup).

Note: When looking for custom assets on the Unity Asset Store (or elsewhere), check to make sure they are compatible with your render pipeline.

Custom Shaders and Shader Graph

URP (and HDRP) include a node-based editor for creating custom shaders, called “Shader Graph”

I won’t be getting into the details of custom shaders for this class. But in some cases there are simple shaders that you can build which aren’t included with URP.

Vertex Color Shader

It is possible to store color data on the vertices of a model, a technique known as vertex painting. For example, the models in the Everything Library do not come with textures; their colors are stored on the vertices instead.

To display the colors of these models, you need a material whose shader passes the model’s vertex colors through to the surface color. This is pretty easy to make using a shader graph.

You can then create a material that uses this shader graph and apply it to any object that has vertex colors:

Universal Render Pipeline, Lights, Materials, Shaders

So you may have an idea of how you would like things to look, but how do you design visual elements within Unity?

Post-processing

URP uses Volumes to add post-processing effects to a scene. A Global Volume applies its overrides everywhere; a Local Volume applies them only while the camera is inside the Volume’s bounds.

- Enable “Post Processing” in the Camera

- Create a Volume Game Object and then create a Profile

- Add in any overrides that you’d like to include in your scene
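The same three steps can also be driven from a script once the Volume and Profile exist. The following is a minimal sketch, not a required part of the setup: the class name `PulseBloom`, the `targetCamera` field, and the `maxIntensity` value are all hypothetical, and it assumes the script sits on the same Game Object as a Volume whose Profile already has a Bloom override added.

```csharp
using UnityEngine;
using UnityEngine.Rendering;
using UnityEngine.Rendering.Universal;

// Hypothetical helper: attach to a Game Object that has a Volume component.
public class PulseBloom : MonoBehaviour
{
    public Camera targetCamera;      // assumption: assigned in the Inspector
    public float maxIntensity = 2f;  // arbitrary example value

    Bloom bloom;

    void Start()
    {
        // Same effect as ticking "Post Processing" on the camera
        if (targetCamera != null)
        {
            targetCamera.GetUniversalAdditionalCameraData().renderPostProcessing = true;
        }

        // Look for a Bloom override on this Volume's profile
        Volume volume = GetComponent<Volume>();
        if (volume != null && volume.profile.TryGet(out Bloom found))
        {
            bloom = found;
            bloom.intensity.overrideState = true; // make sure the override is active
        }
    }

    void Update()
    {
        if (bloom == null) return; // no Volume or no Bloom override found

        // Fade the bloom intensity up and down over time
        bloom.intensity.value = Mathf.PingPong(Time.time, maxIntensity);
    }
}
```

Any other override (Vignette, Color Adjustments, and so on) can be fetched the same way, as long as it has been added to the Profile first.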

Mapping Metagames

Consider Google Maps as a Game Engine and take a look at how it has been leveraged for different purposes:

Pokemon GO. Niantic/Google (2016) – see also Ingress from the same company

Importantly, though, it [Pokemon GO] is less about monitoring where individuals go and more about developing the capacity to direct people where it wants them to move.

The PlayStation Dreamworld. Alfie Bown (2017)

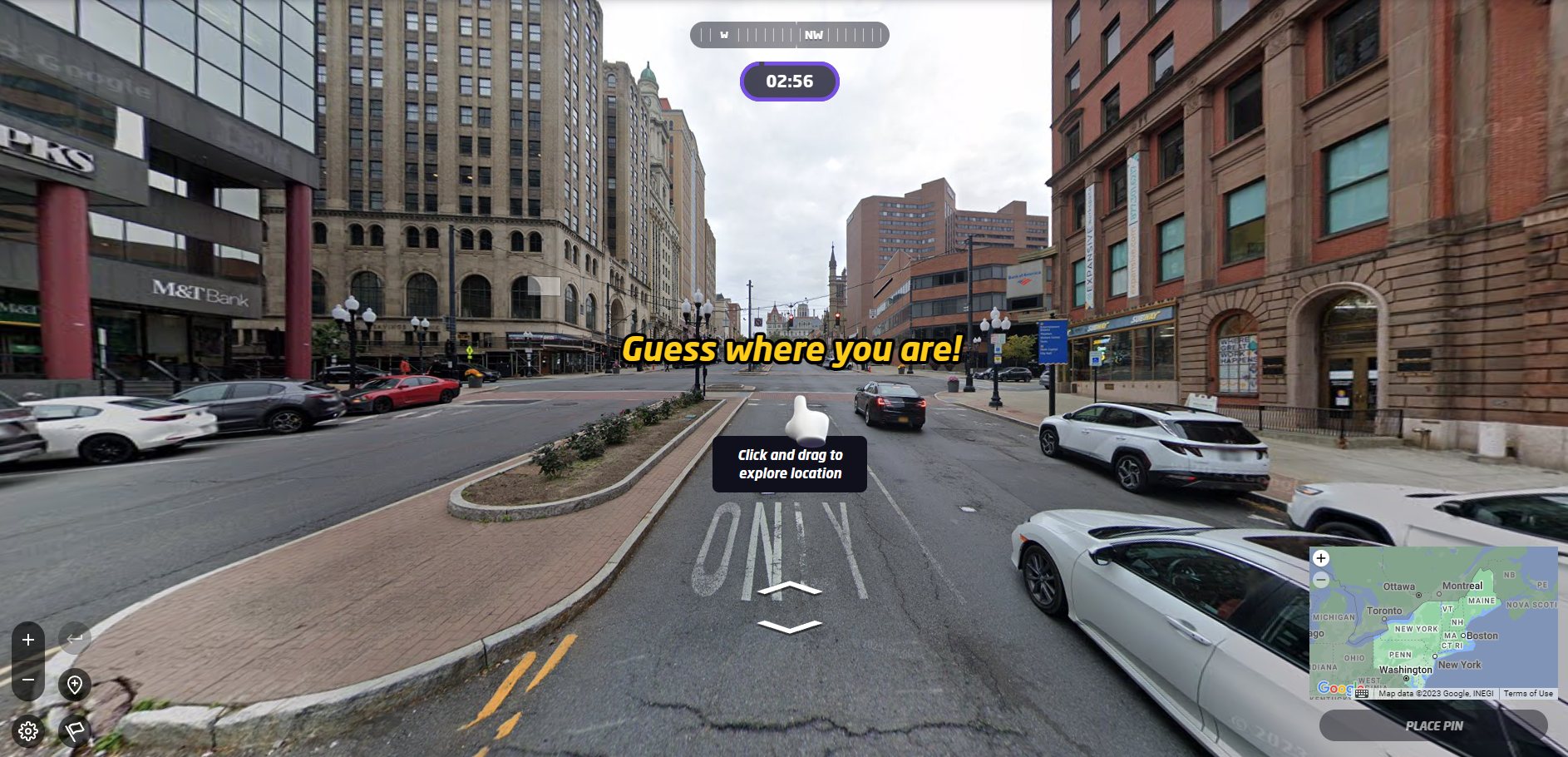

GeoGuessr, making a more literal game out of Google Maps

Postcards from Google Earth. Clement Valla (2010-ongoing)

… these images are not glitches. They are the absolute logical result of the system

- Clement Valla

GEO GOO. JODI (2008) - approaching google maps as a drawing platform

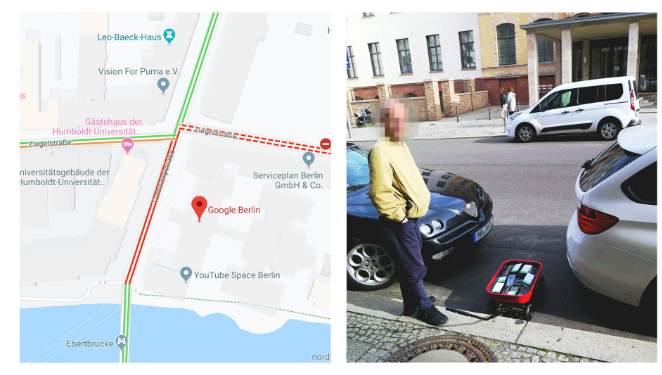

Google Maps Hacks Simon Weckert (2020) – using a wagon full of phones to divert traffic

Prison Map Josh Begley (2012) - Leveraging the availability of data through a platform (google maps) to reflect on aspects of another platform (USA)

Navigating the Stack

Google Maps is just one node within a larger network of other tools, software, interfaces, devices, infrastructures, etc.

Shifting your focus can bring to light another potential game engine.

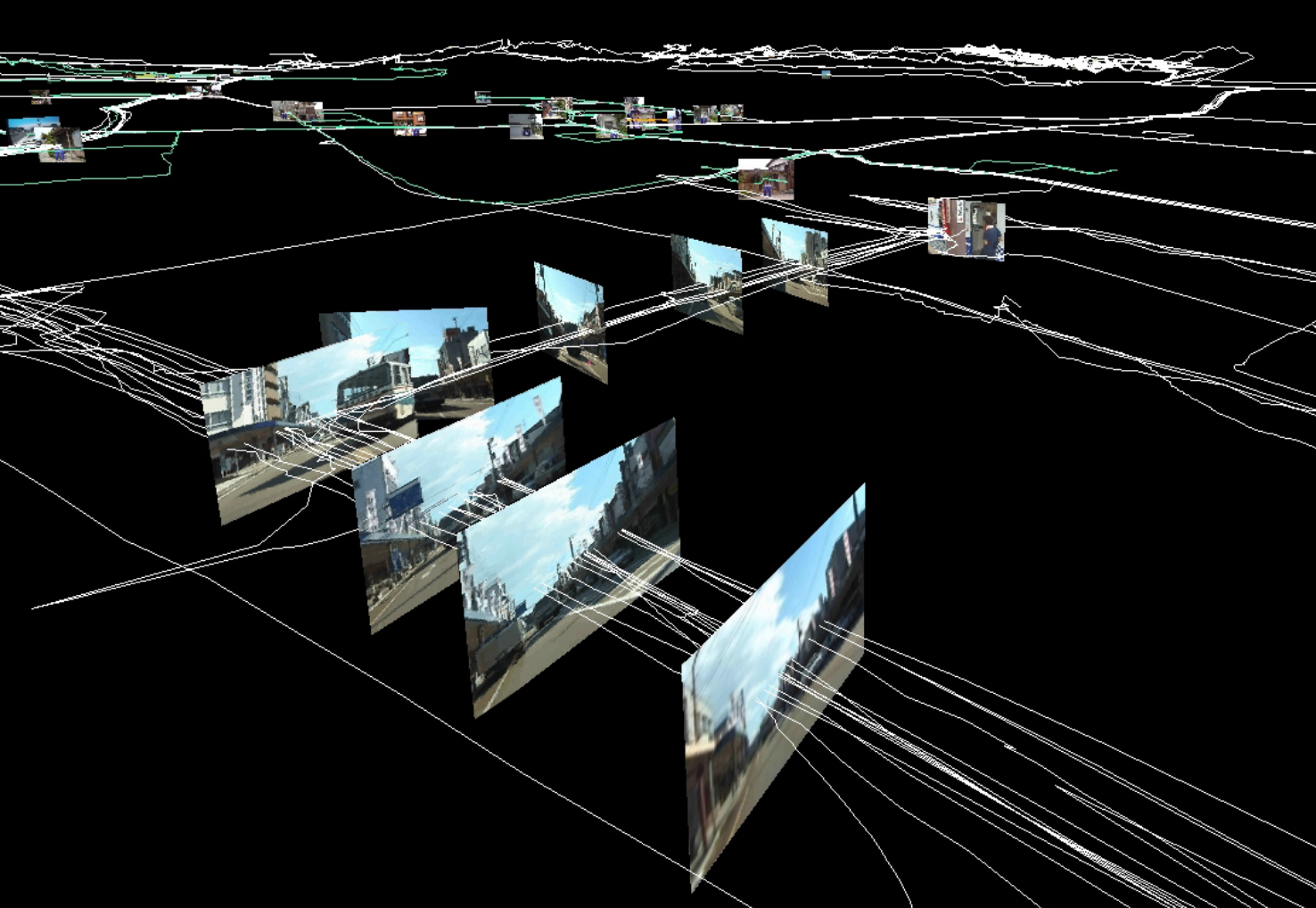

Field Works. Masaki Fujihata (1992 - 2012) – GPS-based work.

One layer below. GPS is an underlying technology which Google Maps relies on. Wayfinding using location data sensors becomes itself a tool for exploration.

iPhone Oil Paintings JK Keller (2012)

One layer above. The device used to view Google Maps (screen, phone, etc.) and our physical incompatibility with these objects can be a different path.

If you are interested in an object – platforms, software, hardware, relationships – but are unsure about how to approach it as an engine, consider the layers surrounding that object (above, below, and adjacent).

Some Questions to ask:

- What are the inputs? Can I intervene?

- What are the outputs? Can I intervene?

- Can it be combined with something else?

… [metagaming] has become a popularly used and particularly useful label for a diverse form of play, a game design paradigm, and a way of life occurring not only around videogames but around all forms of digital technology.

Metagaming. Boluk and LeMieux. 2017

When playing a game, we don’t really think of ourselves as manipulating and executing code as much as attempting to “play” within the constraints or rules dictated by the designer/programmer and enforced by the computer.

Moving from playing games to designing games, the computational aspect becomes more apparent, even while a Game Engine abstracts the low-level puttering involved in the staging and display of the game. But as a designer, there is no expectation that the player could themselves become a programmer and rewrite your game.

Speedrunning is another form of hacking, but only with in-universe tools.

You can go anywhere you want in gamespace, but you can never leave it.

Gamer Theory. McKenzie Wark (2007)

Cameras

Knowing a bit more about working with cameras in Unity can be especially useful when it comes to building projects that sample, recombine, or display live, external imagery.

I’ll cover some important tools to know about and a few tricks:

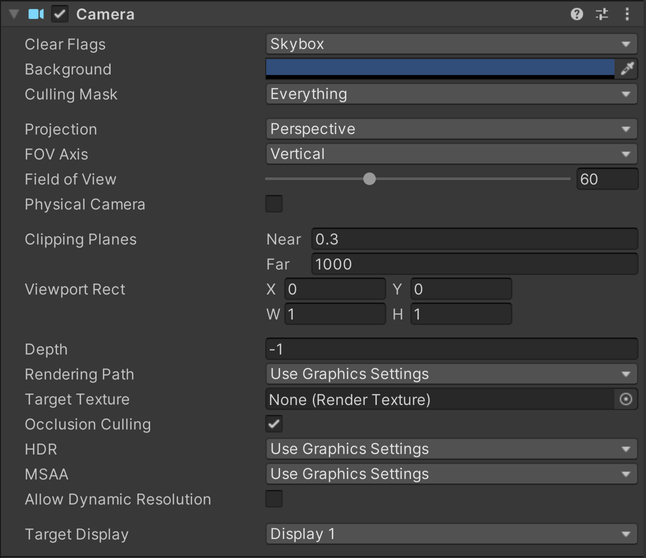

Camera component

A Built-in Render Pipeline (Unity’s standard pipeline) camera component

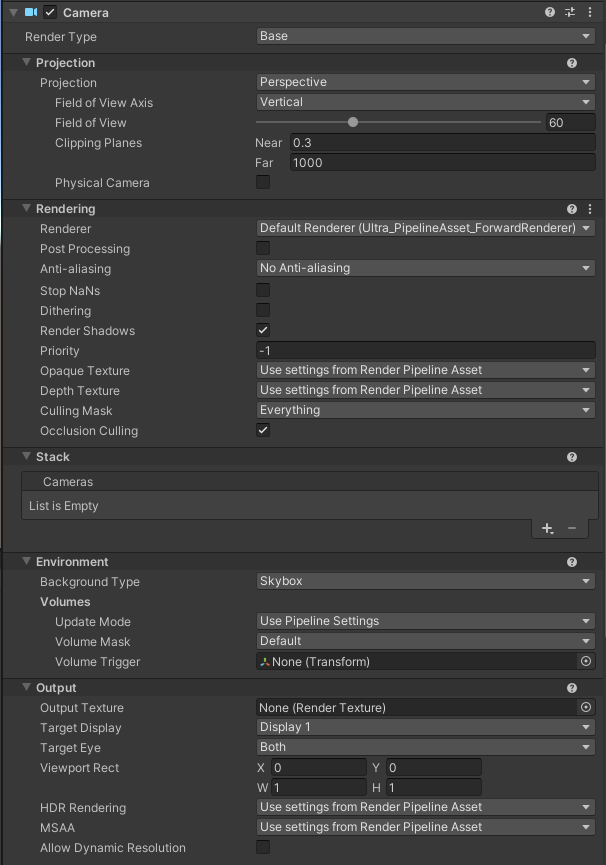

URP Camera component

The URP camera adds a significant number of options compared to the default camera component. It can be good to glance at the documentation to see what everything does, but some settings are much more commonly used than others:

- Projection: Change camera between Perspective and Orthographic

- Field of view (FOV): How wide (or tall) of an area is visible to the camera. Larger FOV means you can see more.

Hyper Demon is a game that encourages high FOV to keep track of everything around you.

- Clipping planes: What are the closest and farthest distances that a camera can “see”

- Post Processing: Check the box if you want to use post processing effects (global volume)

- Anti-aliasing: Smooths out any “jaggy” lines. Can be heavy on processing

- Culling Mask: Control which layers the camera can see

- Occlusion Culling: Skip rendering objects that are completely hidden behind other objects

- Background Type: What is drawn behind everything (like background in p5)

- Output Texture: Important if you want to use the camera with a Render Texture

- Target Display: Control where the camera displays, can use for multi-screen output

- Viewport Rect: Control how much of the camera view is rendered to the screen (useful for split screen)

Some intense aliasing
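Most of the settings listed above can also be changed from a script, which is handy when a camera needs to react during play. This is a rough sketch under a few assumptions: the class name `CameraSettingsSketch`, the specific values, and the layer name "Hidden" are made up for illustration (the layer would need to exist in your project for the culling line to do anything).

```csharp
using UnityEngine;

// Hypothetical example: attach to a Game Object that has a Camera.
[RequireComponent(typeof(Camera))]
public class CameraSettingsSketch : MonoBehaviour
{
    void Start()
    {
        Camera cam = GetComponent<Camera>();

        // Projection: false = Perspective, true = Orthographic
        cam.orthographic = false;

        // Field of view in degrees (vertical by default); larger sees more
        cam.fieldOfView = 100f;

        // Clipping planes: nearest and farthest visible distances
        cam.nearClipPlane = 0.1f;
        cam.farClipPlane = 200f;

        // Culling Mask: stop drawing one layer, if that layer exists
        int hiddenLayer = LayerMask.NameToLayer("Hidden"); // assumed layer name
        if (hiddenLayer >= 0)
        {
            cam.cullingMask &= ~(1 << hiddenLayer);
        }

        // Viewport Rect: draw into the left half of the screen (split screen)
        cam.rect = new Rect(0f, 0f, 0.5f, 1f);

        // Target Display: 0 is the first display
        cam.targetDisplay = 0;
    }
}
```

A second camera with `cam.rect = new Rect(0.5f, 0f, 0.5f, 1f)` would fill the right half of the screen for a two-player split.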

Render Texture

Camera output isn’t limited to a display. You can add the output to other objects in the scene using a Render Texture

Here’s a short step-by-step setup for connecting a camera’s output to a material using a render texture:

- In the Project Panel: Create > Render Texture

- By default these are 256x256. It’s a good idea to only increase the texture size based on where it’s being used. If the texture is looking too low-res, try increasing the dimensions.

- In the Hierarchy of your scene, add a new camera: Create > Camera

- Drag the Render Texture to the new Camera’s Output Texture property.

- Create a new Material and drag the Render Texture into the “BaseMap” property (you could also experiment with using the render texture elsewhere).

- Attach the material to an object, plane, or UI element (Raw Image)

- Press play and the output of the camera should appear on the object.

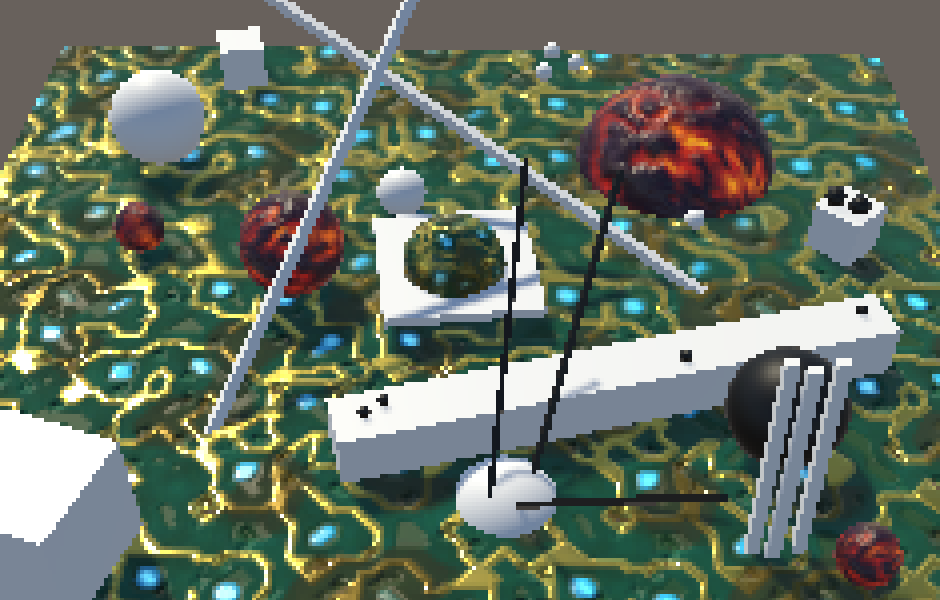

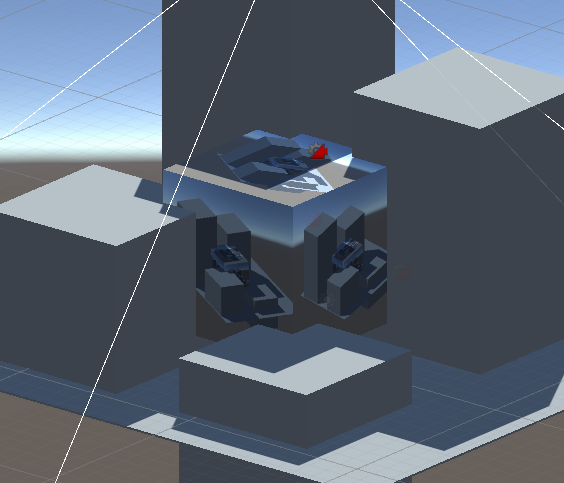

Output of camera attached to a cube using a Render Texture
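The editor steps above can be approximated in code, which is useful if you want to create render textures on the fly. This is only a sketch: the class name `CameraToMaterial` and the `sourceCamera` and `size` fields are hypothetical, and it assumes the script is attached to an object with a Renderer.

```csharp
using UnityEngine;

// Hypothetical example: shows another camera's view on this object's material.
public class CameraToMaterial : MonoBehaviour
{
    public Camera sourceCamera; // assumption: the extra camera, assigned in the Inspector
    public int size = 256;      // matches the default Render Texture size

    RenderTexture renderTexture;

    void Start()
    {
        Renderer targetRenderer = GetComponent<Renderer>();
        if (sourceCamera == null || targetRenderer == null) return;

        // Equivalent of Create > Render Texture (16-bit depth buffer)
        renderTexture = new RenderTexture(size, size, 16);

        // Equivalent of dragging the texture onto the camera's Output Texture
        sourceCamera.targetTexture = renderTexture;

        // Equivalent of dragging the texture into the material's base map slot
        targetRenderer.material.mainTexture = renderTexture;
    }

    void OnDestroy()
    {
        if (renderTexture == null) return;

        // Detach and free the texture when this object goes away
        if (sourceCamera != null) sourceCamera.targetTexture = null;
        renderTexture.Release();
        Destroy(renderTexture);
    }
}
```

If the image looks too blocky on a large surface, raise `size`; on a small object the default is usually enough.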

Webcam input

Getting the input from a webcam uses a slightly different type of texture called a WebCamTexture

The demo script from this page is a fast way to get webcam input into Unity:

    // Starts the default camera and assigns the texture to the current renderer
    using UnityEngine;
    using System.Collections;

    public class GetWebCam : MonoBehaviour
    {
        void Start()
        {
            WebCamTexture webcamTexture = new WebCamTexture();
            Renderer renderer = GetComponent<Renderer>();
            renderer.material.mainTexture = webcamTexture;
            webcamTexture.Play();
        }
    }

Attach this script to an object in your scene and it will replace the Base Map texture of the object’s material with the WebCamTexture.

Virtual Webcam Input

If I want to route video from another program on my computer, I can create a “Virtual Webcam” to pass the input into Unity using the WebCamTexture.

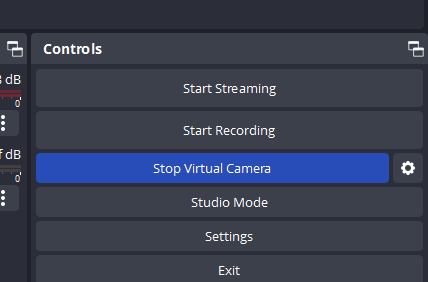

I like to use OBS to capture a screen or window and then start up a Virtual Webcam. In Unity, you’ll need to tell the WebCamTexture to use that device. There are other ways of routing video within a computer (Syphon on macOS, Spout on Windows).

In the controls section of OBS you can start/stop the virtual camera:

Because the script above will always pick the first camera, you’ll need to modify it a bit to figure out which camera is the OBS virtual camera:

    // Prints the names of all connected cameras, then starts the first one
    // and assigns the texture to the current renderer
    using UnityEngine;
    using System.Collections;

    public class GetWebCam : MonoBehaviour
    {
        void Start()
        {
            WebCamDevice[] devices = WebCamTexture.devices;
            WebCamTexture webcamTexture = new WebCamTexture();

            if (devices.Length > 0)
            {
                // print names of all connected devices
                foreach (var d in devices) print(d.name);

                // set the device (must happen before Play)
                webcamTexture.deviceName = devices[0].name;

                Renderer renderer = GetComponent<Renderer>();
                renderer.material.mainTexture = webcamTexture;
                webcamTexture.Play();
            }
        }
    }

If you run the game with the updated script, you’ll see the names of the devices in the console. I can see that OBS is the second device:

You can set the device name directly in the script,

    webcamTexture.deviceName = "OBS Virtual Camera";

or change the index of the webcam device from 0 to 1:

    webcamTexture.deviceName = devices[1].name;

Applying the virtual camera input as a texture on an object
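Hard-coding an index or an exact name can break when the script runs on a different computer, since the device order and names vary. One possible workaround, sketched here as an assumption rather than the method used in class, is to search the device list for a name containing a keyword and fall back to the first camera. The class name `GetNamedWebCam` and the `nameContains` field are hypothetical.

```csharp
using System;
using UnityEngine;

// Hypothetical example: picks the first camera whose name contains a keyword.
public class GetNamedWebCam : MonoBehaviour
{
    public string nameContains = "OBS"; // assumed keyword; check the console for real names

    void Start()
    {
        WebCamDevice[] devices = WebCamTexture.devices;
        if (devices.Length == 0)
        {
            Debug.LogWarning("No webcam devices found.");
            return;
        }

        // Fall back to the first device if nothing matches
        string chosenName = devices[0].name;
        foreach (WebCamDevice d in devices)
        {
            print(d.name);
            if (d.name.IndexOf(nameContains, StringComparison.OrdinalIgnoreCase) >= 0)
            {
                chosenName = d.name;
                break;
            }
        }

        // Passing the name to the constructor selects that device
        WebCamTexture webcamTexture = new WebCamTexture(chosenName);

        Renderer targetRenderer = GetComponent<Renderer>();
        if (targetRenderer != null)
        {
            targetRenderer.material.mainTexture = webcamTexture;
        }
        webcamTexture.Play();
    }
}
```

Because `nameContains` is a public field, the keyword can be changed in the Inspector without editing the script.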

Reading Pixels

For grabbing pixels off a Camera or Render Texture, see Texture2D.ReadPixels.

WebCamTexture has its own version of reading pixels called GetPixels.

In both cases, you’ll be writing the output to a new texture or material rather than editing the pixels of the texture before it is passed to a display.
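For the Render Texture case, a rough sketch of the ReadPixels approach might look like the following. The class name `ReadRenderTexture` and the `source` field are hypothetical, the script assumes it sits on an object with a Renderer using a URP material, and copying pixels back from the GPU every frame is slow, so treat this as a starting point rather than a recommended pattern.

```csharp
using UnityEngine;

// Hypothetical example: tints this object with the center pixel of a Render Texture.
public class ReadRenderTexture : MonoBehaviour
{
    public RenderTexture source; // assumption: a Render Texture a camera is drawing into

    Texture2D copy;
    Material targetMaterial;

    void Start()
    {
        Renderer targetRenderer = GetComponent<Renderer>();
        if (source == null || targetRenderer == null) return;

        // CPU-readable texture with the same dimensions as the source
        copy = new Texture2D(source.width, source.height, TextureFormat.RGBA32, false);
        targetMaterial = targetRenderer.material;
    }

    void Update()
    {
        if (copy == null) return;

        // ReadPixels reads from whichever Render Texture is currently active
        RenderTexture previous = RenderTexture.active;
        RenderTexture.active = source;
        copy.ReadPixels(new Rect(0, 0, source.width, source.height), 0, 0);
        copy.Apply();
        RenderTexture.active = previous;

        // Use the pixel data, e.g. sample the center of the image
        Color center = copy.GetPixel(copy.width / 2, copy.height / 2);
        targetMaterial.SetColor("_BaseColor", center);
    }

    void OnDestroy()
    {
        if (copy != null) Destroy(copy);
    }
}
```

The copy holds whatever the camera most recently rendered into the Render Texture, so it may lag the current frame slightly.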

Here’s an example of reading the pixel array from a webcam input (extending the previous webcam code from above):

    using UnityEngine;
    using System.Collections;

    public class GetWebCam : MonoBehaviour
    {
        WebCamTexture webcamTexture;
        Color[] data; // use Color32[] (with GetPixels32) if you need better performance
        Material averageMaterial;

        void Start()
        {
            WebCamDevice[] devices = WebCamTexture.devices;
            webcamTexture = new WebCamTexture();

            if (devices.Length > 0)
            {
                // print names of all connected devices
                foreach (var d in devices) print(d.name);
                webcamTexture.deviceName = devices[0].name;

                // store the material to change later
                Renderer renderer = GetComponent<Renderer>();
                averageMaterial = renderer.material;
                webcamTexture.Play();
            }
        }

        void Update()
        {
            // skip frames where the camera is not running or has no new image
            if (averageMaterial == null || !webcamTexture.isPlaying || !webcamTexture.didUpdateThisFrame) return;

            data = webcamTexture.GetPixels();
            if (data.Length == 0) return;

            // e.g. find the average color
            float r = 0f;
            float g = 0f;
            float b = 0f;
            foreach (var col in data)
            {
                r += col.r;
                g += col.g;
                b += col.b;
            }
            int len = data.Length;
            Color avgColor = new Color(r / len, g / len, b / len);

            // set the color of the material
            averageMaterial.SetColor("_BaseColor", avgColor);
        }
    }

This begins to encroach into the territory of shaders (or shader graphs) – URP has a built-in Fullscreen Shader Graph that can be used to design custom post processing effects.

Decal Projectors

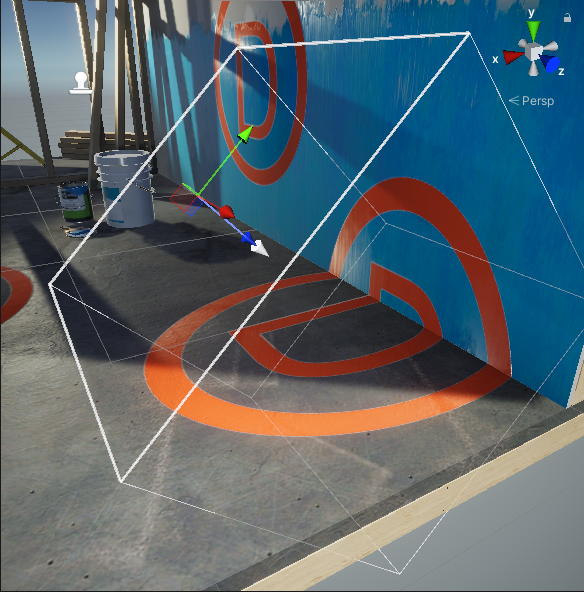

It is not uncommon to add complexity, shadows, markings, logos, etc. to surfaces without wanting to create a custom texture. This can be achieved using a decal projector

For URP, you can add a decal projector to your scene by right-clicking in the hierarchy and selecting Rendering > URP Decal Projector.

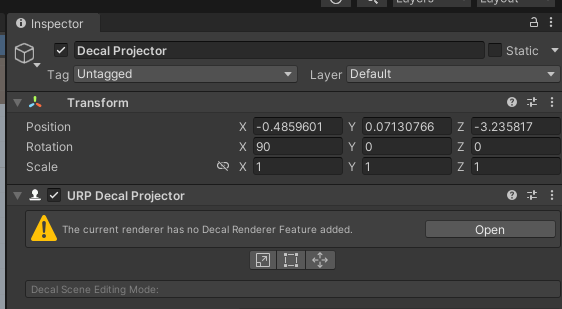

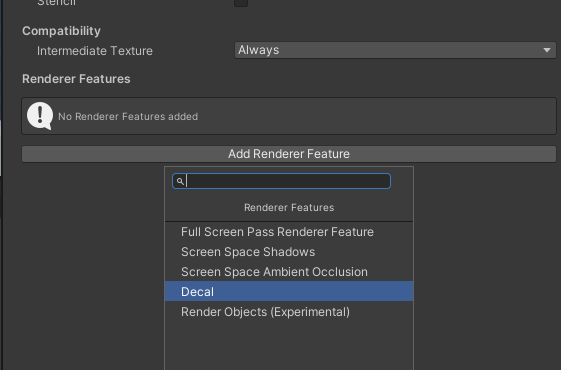

In order to use the decal projector, you’ll need to add the Decal Renderer Feature to your URP renderer settings. Conveniently, Unity tells you that you need to add it and gives you a link:

Click “open” to change the view of the inspector and then find “Add Renderer Feature > Decal”

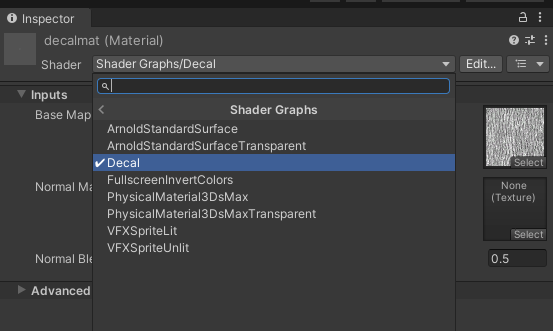

This should reload the scene and the decal projector will be available to use. URP decal projectors use a custom shader found in Shader Graphs/Decal:

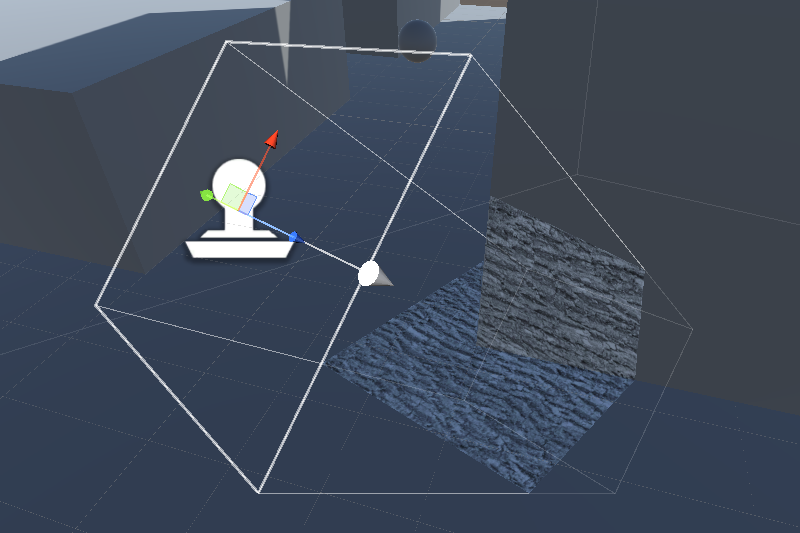

The decal projector applies a texture (and/or normal map) onto a surface along its forward (z) direction. The area it covers depends on the width, height, and projection depth settings in the component:

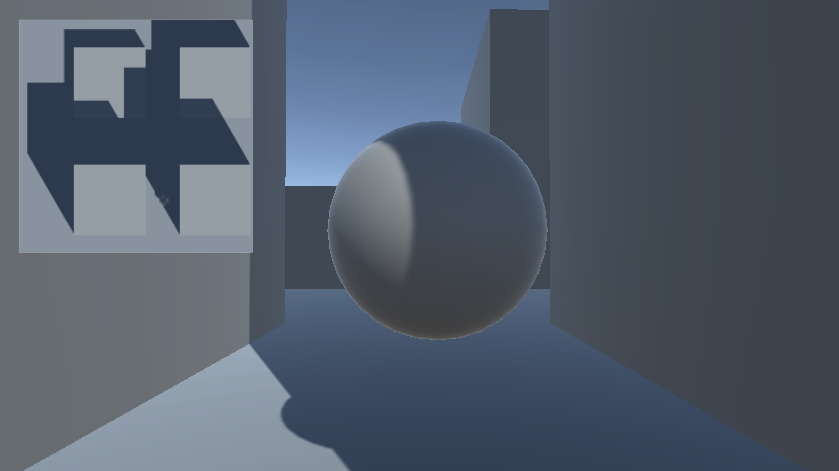

Boring Challenge

Set up a live mini map using an overhead camera, render texture, and a UI Raw Image. For an extra challenge, use culling layers on the cameras to have the character look different on the mini map.

Hint: You need to use an unlit UI material on the RawImage

Better Challenge

Using camera techniques from above, mix together video from inside and outside Unity. Create video feedback loops. Mash up multiple video sources and project them onto 3D objects. Add yourself to the mix with a webcam. Add remote performers from zoom using virtual cameras. Anything can become a source from inside and outside of Unity.

Let’s Paint, Exercise & Blend Drinks - public access television

TV Buddha. Nam June Paik (1974)

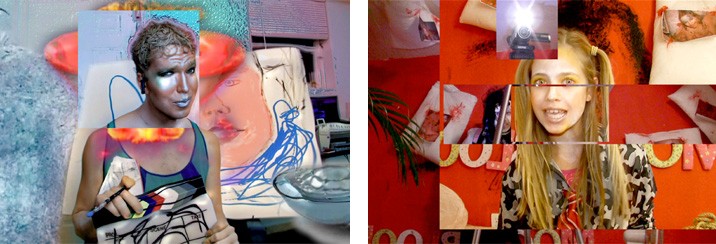

Shana Moulton

Ryan Trecartin & Lizzie Fitch

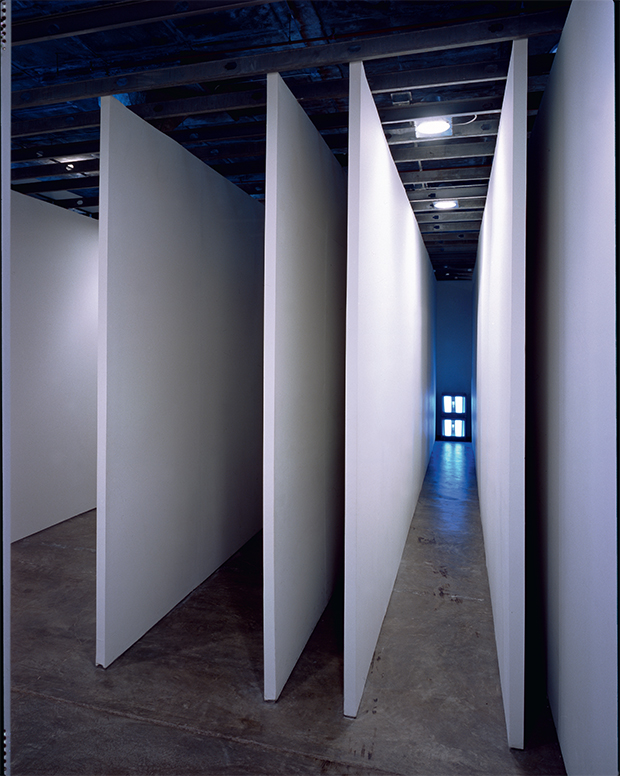

Corridor Installation (Nick Wilder Installation) Bruce Nauman (1970)